The Problem With Science is Scientists

A perfect method is complicated by flawed, human practitioners.

Five years ago, astrophysicist and science communicator Neil deGrasse Tyson posted a memorable, quote-worthy tweet:

Tyson’s ideal world appealed to many people fatigued from emotion-driven, knee jerk politics and political tribal warfare that had invaded every arena of public life, including science. It appealed to many of his fellow scientists, people trained to think objectively and test hypotheses based on observations about the natural world.

The only problem—the huge weight of evidence demonstrates why the virtual country Rationalia just plain ain’t ever gonna happen.

That’s because for humans, thinking rationally takes a tremendous amount of energy and effort. As a result, most of the time we don’t bother. Instead, the vast majority of our thinking is guided completely by our intuition—our instincts alone with none of that pesky interfering rational thought.

This dichotomy is masterfully explained in exquisite detail by Nobel Laureate Daniel Kahneman in his book Thinking, Fast and Slow, and covered with a focus on political divisions in Jonathan Haidt’s masterpiece The Righteous Mind. Both are fantastic works in their own right, and provide fascinating explanations for why people have different views and why it is so difficult to change them.

More importantly, this cognitive dichotomy applies to everyone, even scientists. That may be surprising to some (including some scientists, apparently), as the media and politicians have portrayed scientists (at least the ones they agree with) as imbued with a magical ability to discern and pronounce absolute truth.

This couldn’t be further from reality. I often tell people that the difference between a scientist and the average person is that a scientist is more aware of what they don’t know about their specific field, whereas the average person doesn’t know what they don’t know. In other words, everyone suffers from crushing ignorance, but scientists are (one hopes) usually more aware of the depth of theirs. They might occasionally have an idea about how to slightly increase a particular body of knowledge, and sometimes that idea might even prove successful. But for the most part they spend their time thinking about a deep chasm of knowledge specific to their field.

Scientists are often hindered by their own years of experience and the potentially misleading intuition that has developed as a result. In the book Virus Hunter, authors C.J. Peters and Mark Olshaker tell how a former CDC director remarked that “young, inexperienced EIS (Epidemic Intelligence Service) officers CDC customarily sent out to investigate mystery disease outbreaks and epidemics actually had some advantage over their more experienced and seasoned elders. While having first-rate training and the support of the entire CDC organization, they hadn’t seen enough to have preset opinions and might therefore have been more open to new possibilities and had the energy to pursue them.” Experts are also terrible at making predictions: as researcher and author Philip Tetlock explains in his book Expert Political Judgment, they are no more accurate at forecasting than the average person. The more recent failures of pandemic prediction models have only strengthened this conclusion.

Most successful scientists can trace their crowning achievements to work that occurred early in their careers. This happens, not only because scientists get more job security, but because they get hampered by their own experiences and biases. When I was a lab technician in the late 90s, I remember asking an immunologist for his advice on an experiment I was planning. He ended up giving me a bunch of reasons why there wasn’t any good way to do that experiment and get useful information. I told a postdoc about this encounter, and I recall her saying “Don’t listen to him. That guy can talk you out of doing anything”. Experienced scientists are acutely aware of what doesn’t work, and that can result in an unwillingness to take risks.

Scientists operate in a highly competitive environment where they are forced to spend most of their time seeking research funding by writing endless grant applications, the vast majority of which go unfunded. To be competitive for this limited pool, researchers put the most positive spin on their work, and publish their most positive results. Even if the study veers off-track from what was originally planned, the resulting manuscript rarely reads that way. And these pressures often push data analysis along an error-prone spectrum, from innocently emphasizing positive results, to ignoring negative or contrary data, to outright fabrication. Detailed examples of this are given by author Stuart Ritchie in his book Science Fictions: How Fraud, Bias, Negligence, and Hype Undermine the Search for Truth. Not only does Ritchie explain how science gets distorted when well-meaning scientists face pressures for recognition and funding, he gets into gory details about some of the most prolific fraudsters. Another excellent resource that covers scientific errors and research malfeasance is the website Retraction Watch. The sheer number of retracted papers, many by the same scientists, highlights the importance of documenting and attacking scientific fraud.

The problems with research data reporting and replicability have been known for years. In 2005, Stanford Professor John Ioannidis, among the most highly cited scientists, published one of the most highly cited articles (over 1,600 citations), Why Most Published Research Findings Are False. In the study, Ioannidis used mathematical simulations to show “that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias.” Ioannidis also offered six corollaries derived from his conclusions:

Corollary 1: The smaller the studies conducted in a scientific field, the less likely the research findings are to be true.

Corollary 2: The smaller the effect sizes in a scientific field, the less likely the research findings are to be true.

Corollary 3: The greater the number and the lesser the selection of tested relationships in a scientific field, the less likely the research findings are to be true.

Corollary 4: The greater the flexibility in designs, definitions, outcomes, and analytical modes in a scientific field, the less likely the research findings are to be true.

Corollary 5: The greater the financial and other interests and prejudices in a scientific field, the less likely the research findings are to be true.

Corollary 6: The hotter a scientific field (with more scientific teams involved), the less likely the research findings are to be true.

If you look at the list carefully, 5 and 6 should jump out and scream at you. Here’s a closer look:

“Corollary 5: The greater the financial and other interests and prejudices in a scientific field, the less likely the research findings are to be true. Conflicts of interest and prejudice may increase bias, u. Conflicts of interest are very common in biomedical research [26], and typically they are inadequately and sparsely reported [26,27]. Prejudice may not necessarily have financial roots. Scientists in a given field may be prejudiced purely because of their belief in a scientific theory or commitment to their own findings (emphasis mine). Many otherwise seemingly independent, university-based studies may be conducted for no other reason than to give physicians and researchers qualifications for promotion or tenure. Such nonfinancial conflicts may also lead to distorted reported results and interpretations. Prestigious investigators may suppress via the peer review process the appearance and dissemination of findings that refute their findings, thus condemning their field to perpetuate false dogma. Empirical evidence on expert opinion shows that it is extremely unreliable [28].”

“Corollary 6: The hotter a scientific field (with more scientific teams involved), the less likely the research findings are to be true. This seemingly paradoxical corollary follows because, as stated above, the PPV (positive predictive value) of isolated findings decreases when many teams of investigators are involved in the same field. This may explain why we occasionally see major excitement followed rapidly by severe disappointments in fields that draw wide attention. With many teams working on the same field and with massive experimental data being produced, timing is of the essence in beating competition. Thus, each team may prioritize on pursuing and disseminating its most impressive “positive” results…”
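For readers who want to see the arithmetic behind these two corollaries, here is a minimal Python sketch of the positive predictive value (PPV) formulas as I understand them from Ioannidis’s paper. R is the pre-study odds that a tested relationship is true, alpha is the false-positive rate, beta is the false-negative rate, u is the share of analyses pushed toward a “positive” result by bias, and n is the number of independent teams chasing the same question. The function names and the example numbers (R = 0.25, alpha = 0.05, beta = 0.2) are my own illustrative assumptions, not values taken from the paper or this post.

```python
def ppv_with_bias(R, alpha=0.05, beta=0.2, u=0.0):
    """Chance that a claimed finding is true, given bias u (Corollary 5).

    R     -- pre-study odds that the tested relationship is real (assumed)
    alpha -- false-positive rate (Type I error)
    beta  -- false-negative rate (Type II error); power is 1 - beta
    u     -- fraction of would-be negative analyses reported as positive
    """
    true_positives = (1 - beta) * R + u * beta * R
    all_positives = R + alpha - beta * R + u - u * alpha + u * beta * R
    return true_positives / all_positives


def ppv_with_teams(R, n, alpha=0.05, beta=0.2):
    """Chance that a claimed finding is true when n independent teams
    test the same question and at least one reports a positive result
    (Corollary 6). No bias term is included here."""
    true_positives = R * (1 - beta ** n)
    all_positives = R + 1 - (1 - alpha) ** n - R * beta ** n
    return true_positives / all_positives


if __name__ == "__main__":
    R = 0.25  # illustrative assumption: 1 true relationship per 4 false ones

    # Corollary 5: more bias, lower PPV.
    for u in (0.0, 0.1, 0.3, 0.5):
        print(f"bias u={u:.1f}  PPV={ppv_with_bias(R, u=u):.3f}")

    # Corollary 6: more teams, lower PPV.
    for n in (1, 2, 5, 10):
        print(f"teams n={n:<2}  PPV={ppv_with_teams(R, n):.3f}")
```

Under these assumed inputs, PPV falls as either u or n rises, which is the pattern the two corollaries describe. The exact numbers depend entirely on the values chosen for R, alpha, and beta, so treat the output as an illustration and not as an estimate for any real field.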

Scientists prejudiced because of their beliefs, motivated by the “heat” of the field, and thus prioritizing positive results are all blazingly obvious sources of bias in SARS-CoV-2 research. Ioannidis and colleagues have published on the explosion of published SARS-CoV-2 research, noting “210,863 papers as relevant to COVID-19, which accounts for 3.7% of the 5,728,015 papers across all science published and indexed in Scopus in the period 1 January 2020 until 1 August 2021.” Authors of COVID-19-related articles were experts in almost every field, including “fisheries, ornithology, entomology or architecture”. By the end of 2020, Ioannidis wrote, “only automobile engineering didn’t have scientists publishing on COVID-19. By early 2021, the automobile engineers had their say, too.” Others have also commented on the “covidization” of research, highlighting the reduction of quality of research as COVID mania drove researchers from unrelated fields towards the hottest and most lucrative game in town.

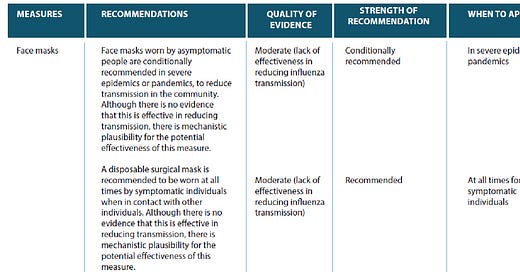

As I discussed in two previous posts, universal masking and reporting about COVID harms to children have been irredeemably politicized and distorted due to the rampant biases of media outlets, politicians, scientists, and public health organizations. But the real culprit may be the public itself, and the first-world, zero-risk safety culture that has encouraged all of these players to exaggerate harms to force behavioral changes in the noncompliant. Furthermore, most compliant people who are “taking the pandemic seriously” want to know that all the sacrifices they have made have been worth it.

And scientists and media outlets are more than happy to deliver:

“Imagine if you were a scientist, and knew that a favorable conclusion of your study would lead to instant recognition by The New York Times, CNN and other international outlets, while an unfavorable result would lead to withering criticism from your peers, personal attacks and censorship on social media, and difficulty publishing your results. How would anyone respond to that?”

The answer is obvious. The overwhelming desire of a terrified public for evidence of interventions that effectively eliminate the risk of infection will inevitably pressure scientists to provide that evidence. Ideally, an acknowledgement of this bias would result in increased skepticism from other scientists and media outlets, but that hasn’t happened. Exaggerated claims of efficacy of interventions and exaggerated harms to promote their acceptance have become the norm in pandemic reporting.

As I discussed in the previous post, the best way to mitigate research bias is for investigators to invite neutral partners to replicate work and collaborate on additional studies. The ability to make all data available to the public and other scientists also invites critical reviews that are crowd-sourced and thus potentially more accurate and less biased. Public availability of datasets and documents has resulted in the improvement of pandemic forecasting and has brought the possibility of a lab-leak origin for SARS-CoV-2 out of conspiracy-theory shadows and into public light.

Others have complained that open data and transparent documentation have been misused by armchair scientists or scientists engaging in epistemic trespassing outside of their respective fields, resulting in a huge, confusing pile of misleading information. Yet, even if the process of science is confined only to “experts”, the vast majority of studies produce very little valuable or accurate information for other researchers or the public at large. Only through a harsh natural selection and peer replication process do the best ideas survive beyond their initial hype. It’s also important to note that groups of researchers in a particular field can be so paralyzed by internal and political biases and toxic groupthink that only those outside of their field are able to call attention to the problem. Therefore, the ability of other scientists and the public to aid in the long-term, corrective process of science is the best way to get closer to the truth, despite our collective flaws.

An excellently organized critique. I would go even further in some spots -

"In other words, everyone suffers from crushing ignorance, but scientists are (one hopes) usually more aware of the depth of theirs."

The lay person knows they do not understand how, for example, the immune system works. They would not profess to be "certain" that a novel vaccine is safe in advance of testing "because X." It is the scientist (and physician even more-so) who is more often trapped in the Dunning–Kruger illusion of certainty.

Natural human psychology ensures that the overconfident outlier gets to dictate the memes of belief, or make a fortune peddling false cures - but they are still the outlier. Scientific education is a machine for making overconfidence the norm - and the outliers who do remain open-minded are, as you say, gradually ossified by the need to sustain and perpetuate their earliest insights. I don't think rationalism is actually a better guard against biases than intuitive, emotional thought - maintaining openness in both requires training, that is all.

Modern science, like 19th-Century Russian aristocratic politics, needs a Dostoyevski to novelize its psychological and spiritual rot one day.

"Most successful scientists can trace their crowning achievements to work that occurred early in their careers. This happens, not only because scientists get more job security, but because they get hampered by their own experiences and biases."

This applies equally to economists, historians, and philosophers.

An obsession with certainty - immediate certainty - is a strong emotional and psychological force. It causes thinkers to rationalize prior positions. And the smarter the rationalizer, the "better" (more complex and intricate) the rationalization.